Putting On A Face or Two

Our local hackerspace is Heatsync Labs, a member- and donation-funded nonprofit based in downtown Mesa and established in 2009. The lab is filled with power tools, learning events, and helpful people. It's definitely the place to be on weekday evenings, when it is open to the public for building art and engineering marvels. That said, the display cases could use more interactivity for people walking by.

We decided to start refurbishing this area with an Internet of Things smorgasbord. The first of our many IoT installations there is a mannequin with a tablet mounted on its head, displaying a web page with a CSS-generated face. The face changes based on sentiment analysis of speech picked up by a microphone dropped through a hole drilled in the roof, running from the inside to the outside.


The software side of all this is fairly simple and involves just two steps.

- Make a PageNodes flow that takes in speech, assigns it a sentiment score, creates a Meshblu connection, and publishes JSON objects to that server over a WebSocket connection.

- Make a site that renders a CSS face and a text box and subscribes, over another WebSocket connection, to the Meshblu server that PageNodes publishes to, with JavaScript functions that alter the face and text box based on the incoming JSON objects.
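Judging from the message handler at the end of this post, each JSON object the flow publishes carries at least the recognized text in `payload` and an integer score in `sentiment.score`. A minimal sketch of a guard the site could run on each incoming message, assuming that shape; the name `readMessage` is hypothetical, and any other fields the flow adds are ignored.

```javascript
// Hypothetical helper: pull out the two fields the page uses from one message.
// Assumed shape (inferred from the handler): { payload: <text>, sentiment: { score: <integer> } }
function readMessage(data) {
  if (!data || typeof data !== 'object') return null;
  // Recognized speech, or an empty string if it is missing.
  const text = typeof data.payload === 'string' ? data.payload : '';
  // Integer sentiment score; a missing or non-numeric score counts as neutral (0).
  const score = data.sentiment && Number.isFinite(data.sentiment.score)
    ? data.sentiment.score
    : 0;
  return { text, score };
}

console.log(readMessage({ payload: 'I love this place', sentiment: { score: 3 } }));
// → { text: 'I love this place', score: 3 }
```

Reading `data.sentiment.score` directly throws if `sentiment` is missing, so a guard like this lets a malformed message fall back to a neutral score instead.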

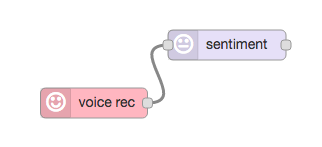

#####The PageNodes Portion

First, we make the voice recognition and sentiment analysis nodes.

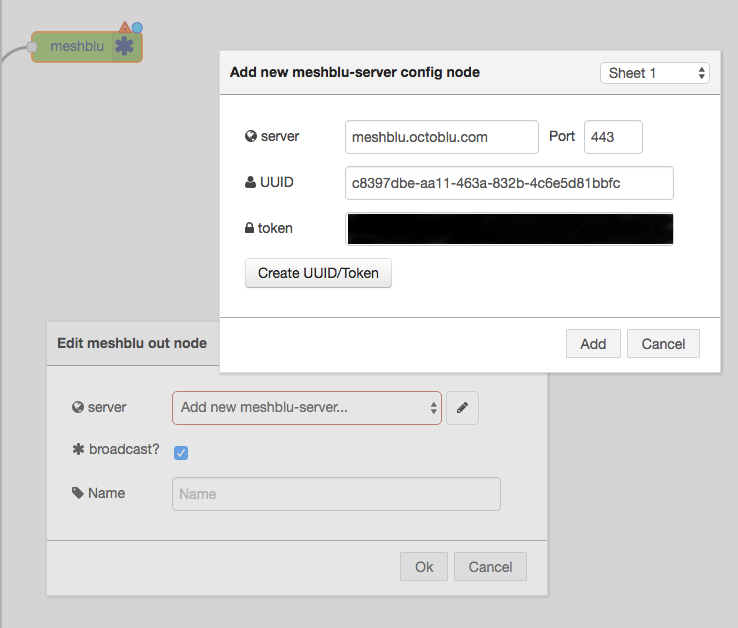

Second, we add a Meshblu server configuration and give it an identity using the “Create UUID/Token” button.

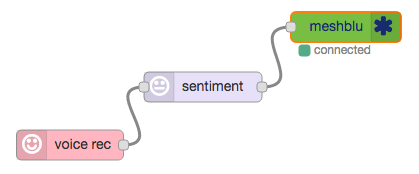

And that’s it!

#####The Site Portion

HTML

We start by making an HTML skeleton like so:

```html
<!doctype html>
<html>
<head>
  <title></title>
  <link rel='stylesheet' href='styles.css'>
</head>
<body>
  <script src='main.bundle.js'></script>
</body>
</html>
```

Each face component is styled per mood with a compound class selector, for example:

```css
div.mouth.angry{}
```

The CSS specifics for all the face components are on my GitHub.

That said, one CSS feature is worth mentioning here, since it animates the face components whenever they change mood: transitions.

```css
transition: all 600ms ease-in-out;
```

The text box gets the same per-mood treatment, with its color changing by class:

```css
.textBox{
  /*General positioning rules and size of text*/
}

.textBox.angry{
  color: #CC0000;
  text-shadow: 2px 2px 2px rgba(0,0,0,1);
}

.textBox.mellow{
  color: #FFFF99;
}

.textBox.ecstatic{
  color: #33FF33;
}
```

JavaScript

Next, main.js keeps a current sentiment value that starts at 15 and stays between 0 and 30 (it never goes lower or higher). It changes by adding the integer score from the sentiment node in our PageNodes flow.
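The post doesn't show the bodies of `addSentiment()` or `decideFace()`, so here is a minimal sketch of the clamped update just described, not the author's code. The starting value of 15 and the 0 to 30 range come from this post, and the mood names are CSS classes used in it; the thresholds in `decideFace()` are hypothetical.

```javascript
// Sketch only: the 0-30 range and starting value of 15 come from the post;
// the mood thresholds below are hypothetical.
const MIN_SENTIMENT = 0;
const MAX_SENTIMENT = 30;
let currentSentiment = 15;

// Add the integer score from the sentiment node, clamped to [0, 30].
function addSentiment(inputScore) {
  currentSentiment = Math.min(MAX_SENTIMENT,
    Math.max(MIN_SENTIMENT, currentSentiment + inputScore));
  return currentSentiment;
}

// Map the running total to one of the mood class names used in the CSS.
// Hypothetical cut-offs: 0-7 angry, 8-17 mellow, 18-24 happy, 25-30 ecstatic.
function decideFace() {
  if (currentSentiment < 8) return 'angry';
  if (currentSentiment < 18) return 'mellow';
  if (currentSentiment < 25) return 'happy';
  return 'ecstatic';
}

addSentiment(5);                              // 15 + 5 = 20
console.log(decideFace());                    // → happy
addSentiment(-40);                            // 20 - 40 clamps to 0
console.log(currentSentiment, decideFace());  // → 0 angry
```

Calling `setMood(decideFace())` after each `addSentiment()` then applies the result, which is what the message handler does. Clamping also caps how far a long rant (or a long rave) can push the total, so the face never drifts far past either extreme.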

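```javascript
var currentSentiment = 15;
```

Three functions handle the mood:

```javascript
function setMood(mood){}

function addSentiment(inputScore){}

function decideFace(){}
```

They are chained as `setMood(decideFace())`, so the chosen face is applied right away. Inside `setMood`, each face component's class is swapped for the new mood:

```javascript
document.getElementById('mouth').className='mouth ' + mood;
document.getElementById('leftbrow').className='brows left ' + mood;
```

So a face that starts out mellow:

```html
<div class='mouth mellow' id='mouth'></div>
<div class='brows left mellow' id='leftbrow'></div>
```

becomes this after `setMood('happy')`:

```html
<div class='mouth happy' id='mouth'></div>
<div class='brows left happy' id='leftbrow'></div>
```

To receive the flow's messages, the page connects to Meshblu:

```javascript
var meshblu = require('meshblu');

var conn = meshblu.createConnection({});
```

Once the connection is ready, it subscribes to broadcasts from the PageNodes device:

```javascript
conn.on('ready', function(data){
  console.log('ready', data);
  conn.subscribe({
    uuid: 'c8397dbe-aa11-463a-832b-4c6e5d81bbfc',
    types: ['broadcast']
  }, function(err){
    console.log('subscribed', err);
  });
});
```

That UUID property should look familiar: it is the same series of characters generated when we made that Meshblu server configuration in PageNodes.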

Looking back at our constructed Meshblu node, we can see it's the same as our UUID property.

Now the page can react every time it receives a new message, like so:

```javascript
conn.on('message', function(data){
  addSentiment(data.sentiment.score);
  setMood(decideFace());
  inputText(data.payload);
});
```

And there you have it!

An interactive web page that takes in speech, delivered as JSON objects by PageNodes, and changes its facial expression accordingly.
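Because the page only reacts to `message` events, the handler can be exercised without a live Meshblu server. A minimal sketch, assuming Node.js: an `EventEmitter` stands in for `conn` (it is not the Meshblu client), and `addSentiment`, `decideFace`, `setMood` and `inputText` are simplified placeholders that record calls instead of editing the DOM. The message shape `{ payload, sentiment: { score } }` is inferred from the handler above.

```javascript
const { EventEmitter } = require('events');

// Stand-in for the Meshblu connection (NOT the meshblu client): it only emits 'message'.
const conn = new EventEmitter();

// Simplified placeholders for the page's functions; they record what happened
// instead of touching the DOM. The mood rule is a placeholder, not the post's.
let currentSentiment = 15;
const calls = [];
function addSentiment(inputScore) { currentSentiment += inputScore; }
function decideFace() { return currentSentiment >= 15 ? 'happy' : 'angry'; }
function setMood(mood) { calls.push('mood:' + mood); }
function inputText(text) { calls.push('text:' + text); }

// The same message handler as in the post.
conn.on('message', function (data) {
  addSentiment(data.sentiment.score);
  setMood(decideFace());
  inputText(data.payload);
});

// Simulate two messages in the assumed shape. EventEmitter calls listeners
// synchronously, so `calls` is filled by the time emit() returns.
conn.emit('message', { payload: 'what a great day', sentiment: { score: 6 } });
conn.emit('message', { payload: 'this is terrible', sentiment: { score: -15 } });
console.log(calls);
```

Swapping the placeholders for the real functions (with a DOM available) turns this into a quick way to check face changes without speaking at the mannequin.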

What's Being Considered Next?

- Hooking up servos to the mannequin's arms with Johnny-Five so they move based on speech input from outside.

- Connecting Philips Hue lightbulbs and talking to them through PageNodes to change color based on sentiment as well.

- Adding a modestly rare (but not so rare as to break the bank) Pepe that, when my name is spoken, replaces whatever face is currently showing on the web page, then disappears after five seconds and brings the CSS face back.

- Making more IoTing happen, like saying "dance party" to turn on a strip of neon-colored LEDs, replace the CSS face with a disco ball GIF, and play a banger from mounted speakers.
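The Pepe idea boils down to a keyword check plus a timer. A minimal sketch, assuming the recognized text arrives as `data.payload` like the other messages; `makeKeywordSwap`, `showPepe`, `showCssFace` and `OWNER_NAME` are hypothetical names, and the scheduler is injectable so the timing can be tested without waiting five seconds. A plain substring match is used, so a keyword that also appears inside other words would trigger it too.

```javascript
// Hypothetical sketch: when `keyword` appears in the transcript, show an overlay
// and restore the normal face after `durationMs`. Repeated triggers restart the timer.
function makeKeywordSwap(keyword, showOverlay, restoreFace, durationMs,
                         schedule = setTimeout, cancel = clearTimeout) {
  const needle = keyword.toLowerCase();
  let timer = null;
  return function onTranscript(text) {
    if (typeof text !== 'string' || !text.toLowerCase().includes(needle)) {
      return false; // keyword not spoken: leave the current face alone
    }
    showOverlay();
    if (timer !== null) cancel(timer);
    timer = schedule(function () {
      timer = null;
      restoreFace();
    }, durationMs);
    return true;
  };
}

// Possible wiring in the page (all names hypothetical):
// const onTranscript = makeKeywordSwap(OWNER_NAME, showPepe, showCssFace, 5000);
// conn.on('message', function (data) { onTranscript(data.payload); });
```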

We are always open to ideas; leave a comment or tweet at us. -LJ