Jarvis - an Amazon Echo clone in your browser with PageNodes

Virtual assistants are all the rage and the big tech companies all have them now. Siri, Google, Cortana, and of course Alexa on the Amazon Echo. Having a verbal conversation with your computer is the future!

Amazon recently put together a tutorial on building an Echo clone with a Raspberry Pi, a mic, and speakers. It's a great way to get started building the bridge from Star Trek in your living room.

Modern web browsers also have built-in voice capabilities. Let's explore what we can do with those.

WebSpeech API

The WebSpeech API provides SpeechSynthesis and SpeechRecognition objects to use in secure web pages. PageNodes.com, our web-based IoT platform, makes this very simple to use.

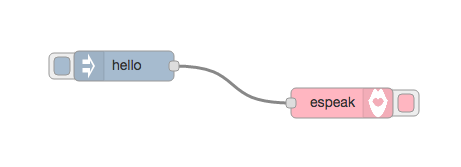

Brett Warner implemented the SpeechSynthesis API with a PageNodes output node. Drop one onto the page, click the deploy button, send it a text payload, and turn up your computer's speakers.
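If you're curious what speaking a text payload looks like in plain browser JavaScript, here's a rough sketch. It is not the PageNodes node's actual source; `pickVoice` and `speak` are names made up for illustration, and the voice name you pass in depends on what your browser has installed.

```javascript
// Hypothetical helper: choose a voice by name, falling back to the first one available.
function pickVoice(voices, preferredName) {
  var match = voices.find(function (v) { return v.name === preferredName; });
  return match || voices[0] || null;
}

// Hypothetical helper: speak some text with the browser's speech synthesis.
// Returns false when speech synthesis isn't available (for example, outside a browser).
function speak(text, preferredName) {
  if (typeof window === 'undefined' || !window.speechSynthesis) {
    return false;
  }
  var utterance = new SpeechSynthesisUtterance(text);
  // Note: getVoices() can return an empty list until the browser has loaded its voices.
  var voice = pickVoice(window.speechSynthesis.getVoices(), preferredName);
  if (voice) {
    utterance.voice = voice;
  }
  window.speechSynthesis.speak(utterance);
  return true;
}
```

In a browser console, `speak('Hello there', 'Google UK English Male')` should make some noise if that voice is installed.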

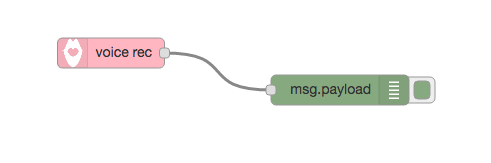

Following that, Sam Clark created a voice recognition input node using the SpeechRecognition API. Drop one onto the page and it will start emitting messages as you speak to it.
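For the curious, here's a minimal sketch of listening for speech directly in the browser. Again, this is an assumption about how such a node could work rather than its real implementation; `latestTranscript` and `startListening` are illustrative names. Chrome exposes the constructor with a `webkit` prefix, so the sketch checks for both.

```javascript
// Hypothetical helper: pull the most recent transcript out of a recognition result event.
function latestTranscript(event) {
  var result = event.results[event.results.length - 1];
  return result[0].transcript.trim();
}

// Hypothetical helper: start listening and call onText with each recognized phrase.
// Returns null when speech recognition isn't available.
function startListening(onText) {
  var Recognition = typeof window !== 'undefined' &&
    (window.SpeechRecognition || window.webkitSpeechRecognition);
  if (!Recognition) {
    return null;
  }
  var recognition = new Recognition();
  recognition.continuous = true;      // keep listening after each phrase
  recognition.interimResults = false; // only report final results
  recognition.onresult = function (event) {
    onText(latestTranscript(event));
  };
  recognition.start();
  return recognition;
}
```

Calling `startListening(function (text) { console.log(text); })` in a supporting browser should prompt for microphone access and then log what it hears.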

While I was entertaining myself by having the computer say bad words, Alyson Zepeda decided to actually make something useful.

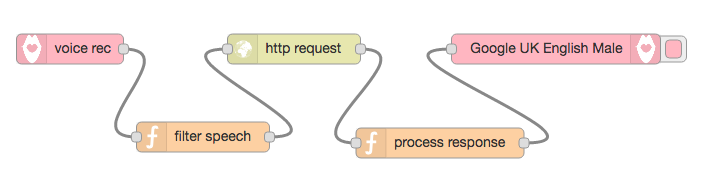

After maybe five minutes of hacking, Aly created:

Jarvis

To make your own Jarvis virtual assistant, you're going to want to have PageNodes take speech input, do something interesting with the text it collects, then have it say something in response with a cool English accent.

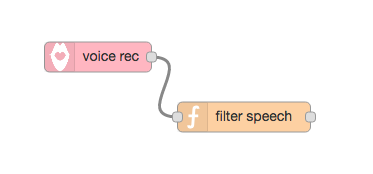

First, let's only process speech input that starts with 'jarvis'. Well, let's process text that at least sounds like it starts that way. Chrome's voice rec is pretty good, but not perfect.

Inside of the function node we have access to the excellent lodash library to help make the code simpler:

    var parsed = msg.payload.split(" ");
    var match = ['jarvis', 'service', 'nervous', 'travis'];

    if(parsed && parsed.length > 1 && _.includes(match, parsed[0].toLowerCase())){
        //we're talking to jarvis!
        parsed.shift();
        var query = parsed.join(' ');

        //let's do something with the query here:
        msg.payload = 'Something cool!';
        return msg;
    }

    return null;

If the function node returns something other than null, it will flow on to the next node.
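If you'd like to try the wake-word check outside of PageNodes, the same idea can be sketched as a standalone function with no lodash dependency. `extractQuery` is a hypothetical name, and the extra guards (non-string input, repeated spaces) are additions for illustration, not part of the flow above.

```javascript
// Hypothetical helper: return the text after the wake word, or null if the
// phrase wasn't addressed to Jarvis (or a sound-alike).
function extractQuery(text, wakeWords) {
  if (typeof text !== 'string') {
    return null;
  }
  var words = text.trim().split(/\s+/);
  if (words.length < 2) {
    return null; // just the wake word, or nothing at all
  }
  if (wakeWords.indexOf(words[0].toLowerCase()) === -1) {
    return null; // not talking to jarvis
  }
  return words.slice(1).join(' ');
}

var wakeWords = ['jarvis', 'service', 'nervous', 'travis'];
var example = extractQuery('Travis what is the weather', wakeWords);
```

Here `example` holds the part of the phrase after the wake word, and anything that doesn't start with one of the listed words comes back as null.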

Next, let's have the function node put together the parameters we need and then send a web request to the Google search engine.

Set up your HTTP request node to be a GET request with a URL of: https://www.googleapis.com/customsearch/v1

We'll modify the code from above to set parameters for the web request.

    var parsed = msg.payload.split(" ");
    var match = ['jarvis', 'service', 'nervous', 'travis'];

    if(parsed && parsed.length > 1 && _.includes(match, parsed[0].toLowerCase())){
        //we're talking to jarvis!
        parsed.shift();
        var query = parsed.join(' ');

        msg.params = {
            q: query,
            cr: 'US',
            cx: '003265628676327108248:v26ein-gdfq',
            num: 10,
            key: ' - My Web Key - '
        };
        return msg;
    }

    return null;

The cx property in msg.params identifies the custom Google search engine we're using. If you'd like to create your own, you can do it here: https://cse.google.com/cse/all

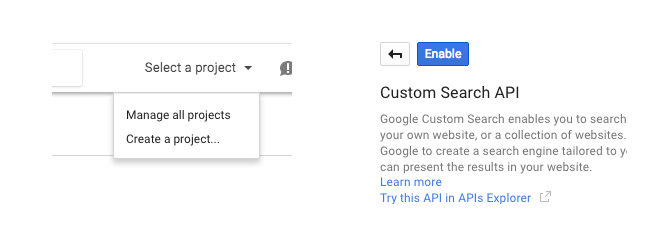

To obtain a web key you'll need to log into the Google API console and create a project. You'll also need to click the credentials link to make the web key for that project.
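To get a feel for the request that goes out, here's a small sketch that builds the search URL by hand. It assumes the parameters end up serialized as an ordinary query string, which is a guess about what the HTTP request node does with msg.params. `buildSearchUrl` is a made-up name, the key and engine id below are placeholders, and only a subset of the parameters is shown.

```javascript
// Hypothetical helper: build a Custom Search request URL from a query,
// an API key, and a search engine id (the cx value).
function buildSearchUrl(query, apiKey, engineId) {
  var params = new URLSearchParams({
    q: query,
    cx: engineId,
    num: '10',
    key: apiKey
  });
  return 'https://www.googleapis.com/customsearch/v1?' + params.toString();
}

// Placeholder values; substitute your own key and engine id.
var searchUrl = buildSearchUrl('star trek bridge', 'YOUR_WEB_KEY', 'YOUR_ENGINE_ID');
```

Pasting a URL like this into a browser tab (with a real key) is a quick way to check your credentials before wiring up the flow.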

Finally, we'll take the response, pull out the text we want, and feed it into the SpeechSynthesis node.

The response we get is in JSON format already; we just need to grab the first item and then send the new payload along.

    if(msg.payload && msg.payload.items){
        //declare answer locally so it doesn't leak into the global scope
        var answer = msg.payload.items[0].snippet;

        if(answer.length > 150){
            //speech sometimes doesn't like huge strings.
            answer = answer.substring(0,150);
        }

        msg.payload = answer;
        return msg;
    }

    return null;

And there you have it. A browser-based Jarvis virtual assistant!
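The response-handling step can also be written as a standalone helper, which makes it easy to experiment with. This sketch is a variation, not the flow's actual code: `snippetToSpeech` is a hypothetical name, and cutting at a word boundary and guarding against an empty items list are additions for illustration.

```javascript
// Hypothetical helper: pick the first result's snippet and shorten it for speech.
// Returns null when there is nothing to say.
function snippetToSpeech(response, maxLength) {
  var items = response && response.items;
  if (!items || items.length === 0) {
    return null;
  }
  // Collapse line breaks and repeated spaces so the voice reads smoothly.
  var snippet = String(items[0].snippet || '').replace(/\s+/g, ' ').trim();
  if (snippet.length <= maxLength) {
    return snippet;
  }
  // Cut at the last space before the limit so we don't stop mid-word.
  var cut = snippet.substring(0, maxLength);
  var lastSpace = cut.lastIndexOf(' ');
  return lastSpace > 0 ? cut.substring(0, lastSpace) : cut;
}

var spoken = snippetToSpeech({ items: [{ snippet: 'The quick brown fox jumps' }] }, 12);
// spoken is 'The quick'
```

Inside a function node you could set msg.payload to the returned string and return null when the helper does.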

PageNodes.com gives you the ability to export and import flows. The flow we just built can be loaded by simply importing this JSON and editing the function node to insert your own web key:

[{"id":"4721875f.f2b108","type":"voice rec","z":"f9740f89.10f39","name":"","x":93,"y":68,"wires":[["f1138943.020728"]]},{"id":"f1138943.020728","type":"function","z":"f9740f89.10f39","name":"filter speech","func":"var parsed = msg.payload.split(\" \");\nvar match = ['jarvis', 'service', 'nervous', 'travis', 'target'];\n\nif(parsed && parsed.length > 1 && _.includes(match, parsed[0].toLowerCase())){\n parsed.shift();\n var query = parsed.join(' ');\n \n msg.params = {\n q: query,\n cr: 'US',\n cx: '003265628676327108248:v26ein-gdfq',\n num: 10,\n key: ' -- my key --'\n };\n return msg;\n \n}\n\nreturn null;","outputs":1,"noerr":0,"x":226,"y":156,"wires":[["e7caa57f.ff5178"]]},{"id":"e7caa57f.ff5178","type":"http request","z":"f9740f89.10f39","name":"","method":"GET","ret":"txt","url":"https://www.googleapis.com/customsearch/v1","x":317,"y":68,"wires":[["26353cb7.750f34"]]},{"id":"26353cb7.750f34","type":"function","z":"f9740f89.10f39","name":"process response","func":"if(msg.payload && msg.payload.items){\n answer = msg.payload.items[0].snippet;\n \n if(answer.length > 150){\n answer = answer.substring(0,150);\n }\n \n msg.payload = answer;\n \n return msg;\n}\n\nreturn null;","outputs":1,"noerr":0,"x":463,"y":162,"wires":[["5895c041.a6c08"]]},{"id":"5895c041.a6c08","type":"espeak","z":"f9740f89.10f39","name":"","variant":"Google UK English Male","active":true,"x":579,"y":68,"wires":[]}]

Extensions

Getting Google results by talking to a computer is a great first step, but that might be something your phone can do already.

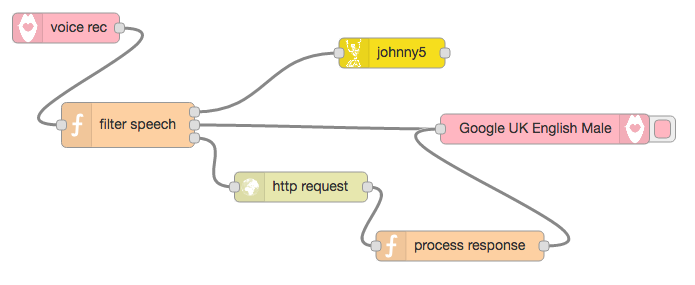

Customizing our filtering function lets us connect to other web services or even hardware devices!

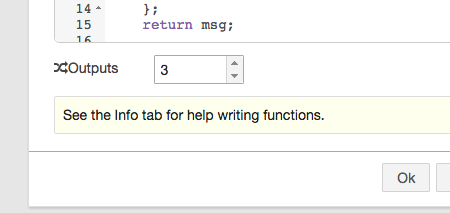

All we have to do is change the number of outputs our filtering function has, and respond with an array of answers to match those outputs.

Our filtering function might look something more like:

    var parsed = msg.payload.split(" ");
    var match = ['jarvis', 'service', 'nervous', 'travis', 'target'];

    if(parsed && parsed.length > 1 && _.includes(match, parsed[0].toLowerCase())){
        parsed.shift();
        var query = parsed.join(' ');

        if(_.startsWith(query.toLowerCase(), 'robot')){
            msg.payload = 'destroy!';
            return [msg, null, null];
        }
        else if(_.startsWith(query.toLowerCase(), 'i love you')){
            msg.payload = 'I love you too.';
            return [null, msg, null];
        }
        else{
            msg.params = {
                q: query,
                cr: 'US',
                cx: '003265628676327108248:v26ein-gdfq',
                num: 10,
                key: ' -- my key --'
            };
            return [null, null, msg];
        }
    }

    return null;

We can then connect the corresponding outputs to different nodes.
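As the list of commands grows, the if/else chain can get long. One possible alternative, sketched here as an assumption rather than how the flow above is built, is a small routing table where each entry's position matches an output. `routeQuery` and `routes` are hypothetical names, and the search parameters are trimmed down to just the query for brevity.

```javascript
// Hypothetical routing table: entry i sends its reply out of output i.
var routes = [
  { prefix: 'robot', reply: 'destroy!' },
  { prefix: 'i love you', reply: 'I love you too.' }
];

// Hypothetical helper: return one slot per output, with the message placed
// in the slot for the matching route (or the last slot for a web search).
function routeQuery(query, msg) {
  var outputs = new Array(routes.length + 1).fill(null);
  var lower = query.toLowerCase();
  for (var i = 0; i < routes.length; i++) {
    if (lower.indexOf(routes[i].prefix) === 0) {
      msg.payload = routes[i].reply;
      outputs[i] = msg;
      return outputs;
    }
  }
  // No command matched: fall through to the search output.
  msg.params = { q: query };
  outputs[routes.length] = msg;
  return outputs;
}

var routed = routeQuery('Robot attack', {});
// routed[0].payload is 'destroy!', routed[1] and routed[2] are null
```

With this shape, adding a command means adding one entry to `routes` and bumping the function node's output count by one.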

Here's a quick video of using Jarvis:

The Internet of Voice Commands

This is still just the beginning...

Here are a few more ideas to help you get started:

- Use Web USB and johnny-five with Jarvis for some home automation.

- Use the Web Camera node to tell Jarvis to snap a selfie for you.

- Connect to language processing services like wit.ai.

- Use our local storage nodes and have Jarvis be your personal note taking service.

- Send your voice commands to a meshblu node and into Octoblu's IoT cloud.

- Use our geolocation node to attach your location for localized search results.

- Send POST requests to IFTTT's maker channel.

- Connect ALL THE THINGS to ALL THE THINGS!

We'd love to hear what you're building. Leave a comment or tweet at us.

-Luis